5.1 Core Components

5.1.1 Nodes

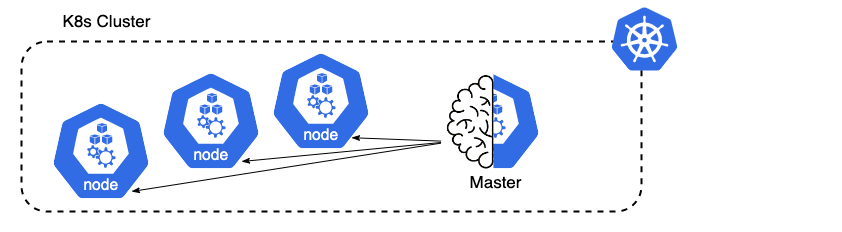

A K8s cluster usually consists of a set of nodes. A node can be a virtual machine (VM) in the cloud, e.g. on AWS, Azure, or GCP, or a physical on-premise machine. K8s distinguishes between master nodes and worker nodes. The master node is basically the brain of the cluster. This is where everything is organized, handled, and managed. In comparison, a worker node is where the heavy lifting happens, such as running applications. Master and worker nodes communicate with each other via the so-called kubelet, an agent on each node that talks to the master's API server. A cluster has at least one master node (several when high availability is required) and usually multiple worker nodes.

K8s Cluster

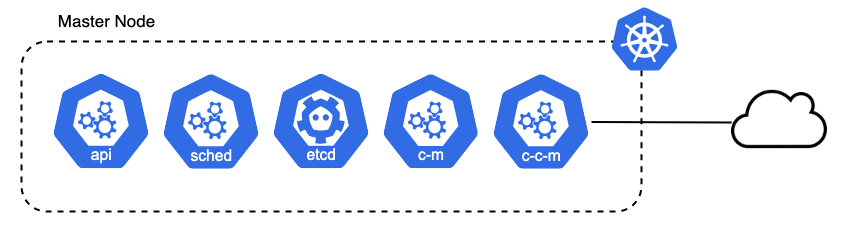

5.1.1.1 Master & Control Plane

To be able to work as the brain of the cluster, the master node contains a control plane made of several components, each of which serves a different function.

- Scheduler

- Cluster Store

- API Server

- Controller Manager

- Cloud Controller Manager

Master Node

5.1.1.1.1 API Server

The API server serves as the frontend to the K8s control plane. All communication, external and internal, goes through it. It exposes a RESTful API over HTTPS (commonly on port 443 or 6443, depending on the setup) and performs authentication and authorization checks. Whenever we perform something on the K8s cluster, e.g. using a command like kubectl apply -f <file.yaml>, we communicate with the API server (what we do here is shown in the section about pods).

5.1.1.1.2 Cluster store

The cluster store holds the configuration and state of the entire cluster. It is a distributed key-value data store (etcd) and the single source of truth of the cluster. As in the example before, whenever we run a command like kubectl apply -f <file.yaml>, the configuration described in the file is persisted in the cluster store.

5.1.1.1.3 Scheduler

The scheduler of the control plane watches for new workloads/pods and assigns them to a node based on several scheduling factors. These factors include whether a node is healthy, whether it has enough resources available, whether a requested port is still free on the node, and whether affinity or anti-affinity rules are satisfied.
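The scheduling factors above show up in a pod specification roughly as follows. This is a minimal sketch rather than a recommended setup: the pod name, the `disktype=ssd` node label, the `nginx` image, and the host port are illustrative assumptions.

```yaml
# scheduling-demo.yaml (hypothetical file name)
apiVersion: v1
kind: Pod
metadata:
  name: scheduling-demo        # hypothetical pod name
spec:
  affinity:
    nodeAffinity:
      # hard rule: only nodes labelled disktype=ssd are considered
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: disktype  # assumed node label
                operator: In
                values:
                  - ssd
  containers:
    - name: app
      image: nginx             # placeholder image
      resources:
        # the scheduler only picks a node that can still fit these requests
        requests:
          memory: "64Mi"
          cpu: "250m"
      ports:
        - containerPort: 80
          # a host port limits placement to nodes where port 8080 is unused
          hostPort: 8080
```

If no node satisfies all of these constraints, the pod stays in the Pending state until one does.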

5.1.1.1.4 Controller manager

The controller manager is a daemon that runs the control loops of the cluster. In other words, it is basically a controller of other controllers. Each controller watches the API server for changes to the resources it is responsible for. Whenever the current state of such a resource does not match its desired state, the responsible controller makes the changes needed to reconcile the two. Examples are the controllers for ReplicaSets, endpoints, namespaces, and service accounts. There is also the cloud controller manager, which is responsible for interacting with the underlying cloud infrastructure.
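To make the idea of desired versus current state more concrete, here is a minimal sketch of a ReplicaSet manifest. The resource name, the `app: demo` label, and the `nginx` image are assumptions chosen for illustration only.

```yaml
# replicaset-demo.yaml (hypothetical file name)
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: demo-rs                # hypothetical name
spec:
  # desired state: three pods matching the selector should exist at all times
  replicas: 3
  selector:
    matchLabels:
      app: demo                # assumed label
  # template used to create new pods when fewer than three exist
  template:
    metadata:
      labels:
        app: demo
    spec:
      containers:
        - name: app
          image: nginx         # placeholder image
```

If one of the three pods disappears, the ReplicaSet controller notices that the current state (two pods) differs from the desired state (three pods) and creates a replacement from the template.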

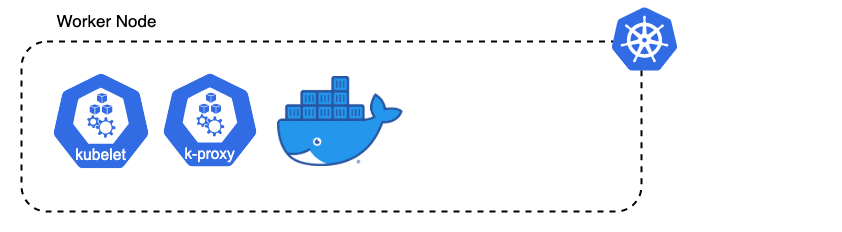

5.1.1.2 Worker Nodes

The worker nodes are the part of the cluster where the heavy lifting happens. Their VMs (or physical machines) often run Linux and thus provide a suitable runtime environment for each application.

Worker Node

A worker node consists of three main components.

- Kubelet

- Container runtime

- Kube proxy

5.1.1.2.1 Kubelet

The kubelet is the main agent of a worker node and runs on every single node. It receives pod definitions from the API server and interacts with the container runtime to run the containers associated with the corresponding pods. The kubelet also reports node and pod state to the master node.

5.1.1.2.2 Container runtime

The container runtime is responsible for pulling images from container registries, e.g. Docker Hub or AWS ECR, as well as for starting and stopping containers. It thus abstracts container management for K8s. The kubelet talks to it through the Container Runtime Interface (CRI).

5.1.1.2.3 Kube-proxy

The kube-proxy runs on every node via a DaemonSet. It is responsible for network communication by maintaining network rules that allow communication to pods from inside and outside the cluster. If two pods want to talk to each other through a service, the rules maintained by the kube-proxy route their traffic. Each node of the cluster gets its own unique IP address. The kube-proxy handles the local cluster networking as well as routing network traffic to a load-balanced service.
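The network rules kube-proxy maintains are derived from Service objects. As a minimal sketch, the following Service would load-balance traffic across all pods carrying a given label. The Service name is hypothetical, and the selector assumes pods labelled `name: hello-world` that listen on port 80.

```yaml
# service-demo.yaml (hypothetical file name)
apiVersion: v1
kind: Service
metadata:
  name: hello-world-svc        # hypothetical name
spec:
  # ClusterIP exposes the service on a cluster-internal virtual IP
  type: ClusterIP
  # traffic is distributed across all pods carrying this label (assumed)
  selector:
    name: hello-world
  ports:
    - port: 80                 # port of the service itself
      targetPort: 80           # port of the container inside the pod
```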

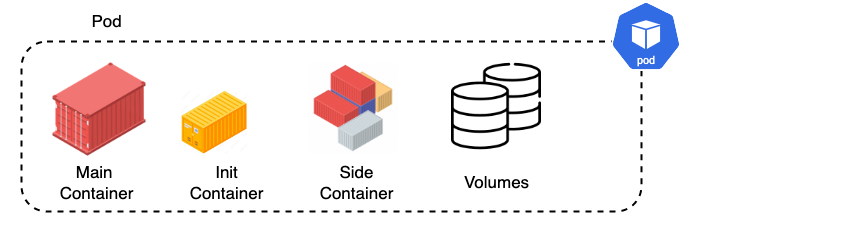

5.1.2 Pods

A pod is the smallest deployable unit in K8s (in contrast, the smallest deployable unit in Docker is a container). A pod runs on one of the cluster's nodes. Within a pod, there is always one main container representing the application (written in whatever language, e.g. JS, Python, Go). There may also be init containers and/or sidecar containers. Init containers are executed before the main container starts. Sidecar containers support the main container, e.g. a container that acts as a proxy to your main container. Volumes may also be specified within a pod, which enable its containers to share and store data.

Pod

The containers running within a pod communicate with each other using localhost and whatever port they expose. The pod itself has a unique IP address, which enables communication with other pods.
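The pieces described above (init container, main container, sidecar container, shared volume, and communication over localhost) can be combined in one pod. The following is a minimal sketch under assumptions: all names, the `busybox` and `nginx` images, and the commands are chosen for illustration only.

```yaml
# multi-container-pod.yaml (hypothetical file name)
apiVersion: v1
kind: Pod
metadata:
  name: multi-container-demo   # hypothetical name
spec:
  volumes:
    # scratch volume that lives as long as the pod and is shared by its containers
    - name: shared-data
      emptyDir: {}
  initContainers:
    # runs to completion before the main container starts
    - name: init-content
      image: busybox
      command: ["sh", "-c", "echo 'hello from the init container' > /data/index.html"]
      volumeMounts:
        - name: shared-data
          mountPath: /data
  containers:
    # main container: serves the file written by the init container
    - name: web
      image: nginx
      ports:
        - containerPort: 80
      volumeMounts:
        - name: shared-data
          mountPath: /usr/share/nginx/html
    # sidecar container: reaches the main container via localhost
    - name: sidecar
      image: busybox
      command: ["sh", "-c", "while true; do wget -q -O- http://localhost:80 > /dev/null; sleep 10; done"]
```

The sidecar can use localhost because all containers of a pod share the same network namespace.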

The problem is that a pod does not have a long lifetime (it is ephemeral) and is disposable. It is therefore advisable never to create a pod on its own within a K8s cluster, but to use controllers such as Deployments to deploy a pod and maintain its lifecycle. In general, resources in K8s are managed either imperatively or declaratively.
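As a minimal sketch of the controller-based approach, a Deployment wraps a pod template and keeps the requested number of pods alive. The manifest below reuses the image and label of this chapter's hello-world pod. The Deployment name and the replica count are assumptions.

```yaml
# deployment.yaml (hypothetical file name)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-world            # hypothetical name
spec:
  replicas: 2                  # assumed replica count
  selector:
    matchLabels:
      name: hello-world
  # pod template: the Deployment creates and replaces pods from this definition
  template:
    metadata:
      labels:
        name: hello-world
    spec:
      containers:
        - name: hello
          image: seblum/mlops-public:cat-v1
          resources:
            limits:
              memory: "128Mi"
              cpu: "500m"
          ports:
            - containerPort: 80
```

If a pod managed by this Deployment is deleted or its node fails, a new pod is created from the template, so the ephemeral nature of a single pod no longer matters.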

5.1.3 Imperative & Declarative Management

Imperative management means managing pods via the CLI and specifying all necessary parameters on the command line. It is good for learning, troubleshooting, and experimenting on the cluster. In contrast, the declarative approach uses a YAML file to state all parameters needed for a resource, and then uses the CLI to apply the changes. The declarative approach is reproducible, which means the same configuration can be applied in different environments (prod/dev). It is the best practice when building a cluster. As stated, this distinction does not only hold for pods, but for all resources within a cluster.

5.1.3.1 Imperative Management

    # start a pod by specifying the pod's name,
    # the container image to run, and the port exposed
    kubectl run <pod-name> --image="<image-name>" --port=80

    # run the following command to test the pod specified
    # it will forward to localhost:8080
    kubectl port-forward pod/<pod-name> 8080:80

5.1.3.2 Declarative Management / Configuration

Declarative configuration is done using a yaml format, which works on key-value pairs.

    # pod.yaml
    apiVersion: v1
    # specify which kind of configuration this is
    # let's configure a simple pod
    kind: Pod
    # metadata will be explained later on in more detail
    metadata:
      name: hello-world
      labels:
        name: hello-world
    spec:
      # remember: a pod is a collection of one or more containers
      # we could therefore specify multiple containers
      containers:
        # specify the container name
        - name: hello
          # specify which container image should be pulled
          image: seblum/mlops-public:cat-v1
          # resource configurations will be handled later as well
          resources:
            limits:
              memory: "128Mi"
              cpu: "500m"
          # specify the port on which the container should be exposed
          # similar to the imperative approach
          ports:
            - containerPort: 80

Apply this declarative configuration using the following kubectl command via the CLI.

    kubectl apply -f "file-name.yaml"

    # similar to before, run the following to test your pod on localhost:8080
    kubectl port-forward pod/<pod-name> 8080:80

5.1.3.3 Kubectl

A word on interacting with the cluster using the CLI. In general, kubectl is used to interact with the K8s cluster. It allows us to run and apply pod configurations as seen before, and to forward ports as already shown. We can also inspect the cluster, see which resources are running on which nodes, view their configurations, and watch their logs. A small selection of commands is shown below.

    # forward port 80 of a pod to localhost:8080
    kubectl port-forward <resource>/<pod-name> 8080:80

    # show all pods currently running in the cluster
    kubectl get pods

    # delete a specific pod
    kubectl delete pods <pod-name>

    # delete a previously applied configuration
    kubectl delete -f <file-name>

    # show all instances of a specific resource running on the cluster
    # (nodes, pods, deployments, statefulsets, etc.)
    kubectl get <resource>

    # describe and show specific settings of a pod
    kubectl describe pod <pod-name>